Fundamentals

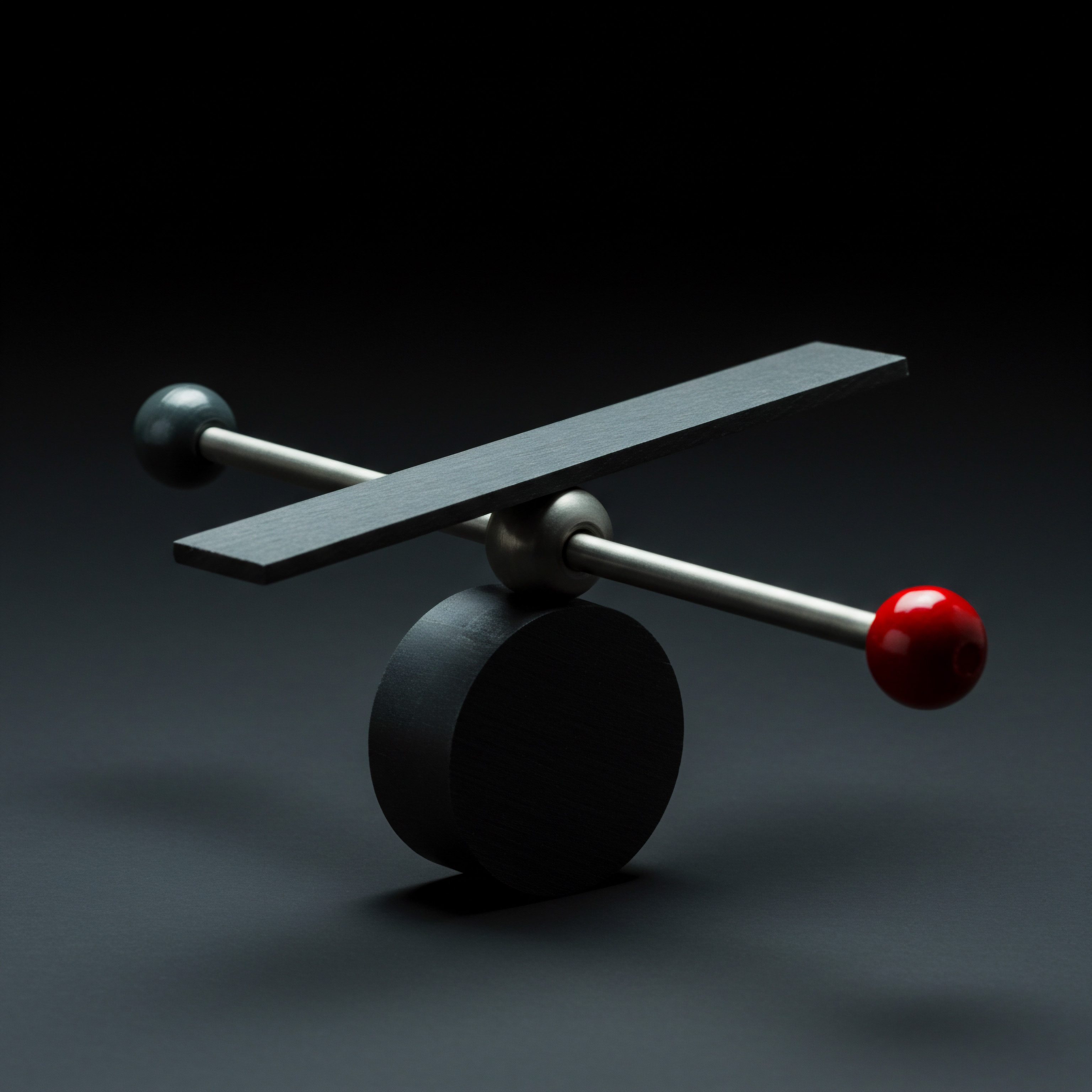

Imagine a local bakery eager to streamline its operations with AI, perhaps for managing online orders or personalizing customer recommendations. Its owners are not thinking about societal bias baked into algorithms; they are thinking about efficiency and growth. Yet even at this micro level, the question of systemic AI fairness looms, often unseen, like yeast in dough: essential, but easily overlooked until the bread fails to rise properly.

For small to medium businesses (SMBs), the abstract concept of ‘systemic AI fairness’ can feel distant from daily concerns of payroll and profit margins. However, fairness is not just a corporate social responsibility checkbox; it is woven into the very fabric of sustainable business practice, impacting everything from customer trust to long-term viability.

Starting Simple Metrics

For an SMB just beginning to consider AI, the idea of ‘metrics’ might conjure images of complex dashboards and data scientists. Forget that for a moment. Start with what is intuitively understandable: Data Representation. Does the data used to train the AI actually reflect the customer base?

If the bakery’s online ordering system is trained only on data from customers who order through a specific app, it misses a huge chunk of potential customers who prefer phone orders or walk-ins. This skewed data creates an unfair system, unintentionally prioritizing one customer segment over another. Another straightforward metric is Output Transparency. Can the AI’s decisions be explained in plain language?

If the AI rejects a loan application for a small business, is the reason given a cryptic algorithm output, or a clear, justifiable explanation that the business owner can understand and potentially address? Transparency builds trust, and trust is the bedrock of SMB success.
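As a minimal sketch of output transparency, assume a simple linear scoring model whose weights are known. The feature names, weights, intercept, and threshold below are hypothetical. Listing the factors that pulled the score down turns a bare rejection into a reason the applicant can understand and act on.

```python
# Hypothetical linear scoring model: feature names, weights, and the threshold
# are illustrative assumptions, not taken from any real lender.
WEIGHTS = {"years_in_business": 0.8, "monthly_revenue_k": 0.05, "missed_payments": -1.5}
INTERCEPT = -2.0
THRESHOLD = 0.0


def explain_decision(applicant):
    """Return (approved, reasons): reasons list the factors that lowered the score."""
    contributions = {name: w * applicant[name] for name, w in WEIGHTS.items()}
    score = INTERCEPT + sum(contributions.values())
    approved = score >= THRESHOLD
    # Sort negative contributions so the most harmful factor is listed first.
    negatives = sorted((c, name) for name, c in contributions.items() if c < 0)
    reasons = [f"{name.replace('_', ' ')} lowered the score by {-c:.2f}" for c, name in negatives]
    return approved, reasons


approved, reasons = explain_decision(
    {"years_in_business": 1, "monthly_revenue_k": 10, "missed_payments": 2}
)
print(approved)  # False
print(reasons)   # ['missed payments lowered the score by 3.00']
```

This only works because the toy model is linear; for more complex models, explanation tools estimate such contributions rather than reading them off directly.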

Understanding Data Skew

Data skew is not some academic term; it is a practical problem every SMB faces. Think about customer reviews. Are you only paying attention to online reviews, missing feedback from in-person interactions? Online reviews tend to be polarized (either extremely positive or extremely negative) and might not represent the average customer experience.

This creates a skewed dataset. If an AI system uses only online reviews to assess customer satisfaction, it will get a distorted picture, leading to potentially unfair or misguided business decisions. Metrics for data skew are not about advanced statistics; they are about common sense. Look at Your Data Sources.

Are they diverse? Do they capture the full spectrum of your customer base and business operations? If not, your AI system, no matter how sophisticated, will inherit and amplify these biases.
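One way to make "look at your data sources" concrete is to compare each channel's share of the training data with its share of the actual customer base. The channel names, counts, customer shares, and the 0.5 cutoff below are hypothetical.

```python
from collections import Counter


def representation_report(training_channels, customer_shares, min_ratio=0.5):
    """Compare each channel's share of training records with its share of customers.

    Returns {channel: (share_of_training_data, ratio_to_customer_share, flagged)}.
    A ratio below min_ratio flags the channel as under-represented; the 0.5
    default is an illustrative choice, not an industry standard.
    """
    counts = Counter(training_channels)
    total = sum(counts.values())
    report = {}
    for channel, expected_share in customer_shares.items():
        observed_share = counts.get(channel, 0) / total
        ratio = observed_share / expected_share
        report[channel] = (round(observed_share, 3), round(ratio, 2), ratio < min_ratio)
    return report


# Hypothetical bakery: training data has 90 app orders, 10 phone orders, no walk-ins,
# while the real customer base splits 40% app, 30% phone, 30% walk-in.
training = ["app"] * 90 + ["phone"] * 10
customers = {"app": 0.4, "phone": 0.3, "walk_in": 0.3}
for channel, (share, ratio, flagged) in representation_report(training, customers).items():
    print(f"{channel}: {share:.0%} of training data, ratio {ratio}, under-represented={flagged}")
```

Here phone and walk-in customers are both flagged, which is exactly the blind spot described for the app-only ordering system.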

Fairness in Automation

Automation is the promise of AI for SMBs: doing more with less. But automation without fairness baked in can lead to unintended consequences. Consider an automated hiring system. If the system is trained on historical hiring data that reflects past biases (for example, if previous hiring managers unconsciously favored candidates from certain backgrounds), the AI will perpetuate these biases, even if unintentionally.

Metrics for fairness in automation start with Audit Trails. Can you track how the AI makes decisions in automated processes? Is there a record of the data used, the algorithms applied, and the outputs generated? Audit trails are not just for compliance; they are essential for identifying and correcting unfairness in automated systems.
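A minimal audit trail can be as simple as appending one JSON line per automated decision, recording the inputs, the model version, and the output. The field names, the "screening-v1" version label, and the applicant fields below are hypothetical; a real deployment would also need retention and access rules.

```python
import json
import tempfile
import time
from pathlib import Path


def log_decision(log_path, model_version, inputs, output):
    """Append one automated decision to a JSON Lines file and return the record."""
    record = {
        "timestamp": time.time(),        # when the decision was made
        "model_version": model_version,  # which model or rule set produced it
        "inputs": inputs,                # the data the decision was based on
        "output": output,                # what the system decided
    }
    with Path(log_path).open("a", encoding="utf-8") as f:
        f.write(json.dumps(record, sort_keys=True) + "\n")
    return record


def load_decisions(log_path):
    """Read every logged decision back, e.g. for a fairness review."""
    with Path(log_path).open(encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


# Usage with a throwaway file in a fresh temporary directory.
demo_log = Path(tempfile.mkdtemp()) / "decisions.jsonl"
log_decision(demo_log, "screening-v1", {"applicant_id": 17, "years_experience": 4}, "advance")
log_decision(demo_log, "screening-v1", {"applicant_id": 18, "years_experience": 1}, "reject")
records = load_decisions(demo_log)
print([(r["inputs"]["applicant_id"], r["output"]) for r in records])
```

An append-only, one-record-per-line format keeps each decision independently readable, so a reviewer can filter by model version or outcome without special tooling.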

Another key metric is Human Oversight. Automation should augment human capabilities, not replace human judgment entirely, especially when fairness is at stake. Are there human checkpoints in automated processes to review AI decisions and ensure fairness, particularly in sensitive areas like hiring or customer service?
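Human checkpoints can be sketched as a routing rule: decisions in sensitive areas, or decisions the model is unsure about, go to a person instead of being applied automatically. The set of sensitive decision types, the "reject" label, and the 0.8 confidence cutoff are hypothetical choices.

```python
from dataclasses import dataclass

SENSITIVE = {"hiring", "credit"}  # hypothetical list of sensitive decision types


@dataclass
class Decision:
    kind: str          # what the decision is about, e.g. "hiring"
    label: str         # what the AI proposes, e.g. "reject"
    confidence: float  # the model's own confidence score, 0 to 1


def route(decision: Decision, min_confidence: float = 0.8) -> str:
    """Return 'auto' to apply the decision, or 'human_review' to queue it for a person."""
    if decision.kind in SENSITIVE and decision.label == "reject":
        return "human_review"  # adverse outcomes in sensitive areas always get a human look
    if decision.confidence < min_confidence:
        return "human_review"  # low-confidence decisions are not applied automatically
    return "auto"


print(route(Decision("hiring", "reject", 0.95)))  # human_review
print(route(Decision("marketing", "send", 0.6)))  # human_review
print(route(Decision("marketing", "send", 0.9)))  # auto
```

Routing every adverse decision in a sensitive area to a person, regardless of confidence, reflects the idea that automation should augment judgment where fairness is at stake rather than replace it.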

For SMBs, systemic AI fairness begins with understanding data representation, output transparency, and fairness in automation, focusing on practical metrics like data skew and audit trails.

Practical Steps for SMBs

Addressing systemic AI fairness does not require a massive overhaul. Small, consistent steps can make a significant difference. Start with a Data Diversity Checklist. When collecting data for AI applications, actively seek diverse sources and perspectives.

If you are a restaurant using AI for menu recommendations, ensure your data includes feedback from all types of diners, not just those who leave online reviews. Implement Explainable AI (XAI) Tools, even in their simplest forms. Many AI platforms offer features that provide insights into how decisions are made. Use these tools to understand the reasoning behind AI outputs and identify potential fairness issues.

Regularly conduct Fairness Reviews of your AI systems, even if it is just a simple team discussion. Ask critical questions: Could this AI system unintentionally disadvantage any customer group? Are there any blind spots in our data or algorithms? These proactive steps, grounded in practical metrics, are the foundation of systemic AI fairness for SMBs.

Ignoring fairness in AI is like ignoring basic accounting principles. It might seem manageable in the short term, but eventually, it catches up, leading to financial instability or reputational damage. Systemic AI fairness, therefore, is not a luxury; it is a fundamental business imperative, even for the smallest enterprise. By starting with simple metrics and practical steps, SMBs can build a foundation for responsible and sustainable AI adoption, ensuring that technology serves to uplift, not undermine, their business and their communities.

Navigating Bias Landscapes

The initial allure of AI for SMBs often centers on efficiency gains and cost reduction, a siren song promising streamlined operations and enhanced profitability. Yet beneath this enticing surface lies a more complex reality: the potential for AI systems to inadvertently amplify existing societal biases, creating unfair outcomes even as they optimize processes. Consider a local e-commerce store using AI to personalize product recommendations.

If the AI algorithm is trained on historical sales data that disproportionately reflects the purchasing habits of one demographic group, it might systematically under-recommend products to other customer segments, limiting their choices and perpetuating a subtle form of market exclusion. For SMBs moving beyond basic AI applications, understanding and mitigating these ‘bias landscapes’ becomes crucial, demanding a shift from simplistic metrics to more nuanced and strategic approaches.

Moving Beyond Surface Metrics

While data representation and output transparency are essential starting points, they are insufficient for addressing systemic AI fairness at an intermediate level. The focus must expand to encompass Algorithmic Bias and Impact Assessment. Algorithmic bias refers to the biases that can creep into AI models during training, often stemming from flawed data or biased algorithm design. Metrics for algorithmic bias involve techniques like Disparate Impact Analysis, which examines whether an AI system disproportionately affects certain demographic groups.

For example, if an automated loan application system has a significantly higher rejection rate for minority-owned businesses compared to non-minority-owned businesses, this indicates disparate impact and potential algorithmic bias. Impact assessment, on the other hand, goes beyond statistical disparities to evaluate the real-world consequences of AI decisions. Metrics here are qualitative as well as quantitative, considering the Social and Economic Ramifications of unfair AI outcomes on customers, employees, and the broader community.
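Disparate impact analysis usually starts with each group's selection rate (its share of positive outcomes) and the ratio of the lowest rate to the highest. A common screening heuristic, the "four-fifths rule" used in US employment-selection guidance, treats a ratio below 0.8 as a warning sign to investigate, not as proof of bias. The loan counts below are invented for illustration.

```python
def selection_rates(outcomes):
    """outcomes maps group -> list of 0/1 decisions (1 = approved)."""
    return {group: sum(d) / len(d) for group, d in outcomes.items()}


def disparate_impact_ratio(outcomes):
    """Lowest group selection rate divided by the highest."""
    rates = selection_rates(outcomes)
    return min(rates.values()) / max(rates.values())


# Hypothetical loan decisions: 30 of 60 approved vs. 45 of 60 approved.
loans = {
    "minority_owned": [1] * 30 + [0] * 30,
    "non_minority_owned": [1] * 45 + [0] * 15,
}
ratio = disparate_impact_ratio(loans)
print(f"DI ratio = {ratio:.2f}; below 0.8: {ratio < 0.8}")  # DI ratio = 0.67; below 0.8: True
```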

Statistical Parity and Beyond

Statistical parity, also known as demographic parity, is a commonly used metric in fairness discussions. It aims for equal outcomes across different groups. In the context of AI, statistical parity would mean that the proportion of positive outcomes (e.g., loan approvals, job offers) should be roughly the same for all demographic groups. However, statistical parity has limitations.

Focusing solely on equal outcomes can sometimes overlook underlying inequalities or create reverse discrimination. A more sophisticated approach involves considering Equality of Opportunity and Equality of Outcome as distinct but related concepts. Equality of opportunity focuses on ensuring that all groups have an equal chance to achieve a positive outcome, regardless of their background; in its common formal version, qualified individuals must receive a positive outcome at the same rate (an equal true positive rate) in every group. Metrics for equality of opportunity might also involve examining the input features used in AI models, ensuring that they are not proxies for protected characteristics like race or gender.

Equality of outcome, while related to statistical parity, emphasizes equitable results in a broader sense, considering not just numerical parity but also the quality and fairness of those outcomes. Moving beyond statistical parity requires a more holistic set of metrics that capture both opportunity and outcome fairness.
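The difference between statistical parity and equality of opportunity shows up clearly in a small example. Statistical parity compares positive-prediction rates across groups; the common formal "equal opportunity" criterion compares those rates only among people whose true label is positive (equal true positive rates). The toy labels and predictions below are invented.

```python
def rate(values):
    """Share of 1s in a list of 0/1 values (0.0 for an empty list)."""
    return sum(values) / len(values) if values else 0.0


def parity_and_opportunity(y_true, y_pred, groups, a, b):
    """Return (statistical parity difference, equal opportunity difference) for groups a, b.

    Statistical parity: P(pred=1 | group=a) - P(pred=1 | group=b)
    Equal opportunity:  P(pred=1 | y=1, group=a) - P(pred=1 | y=1, group=b)
    """
    def preds(group, qualified_only=False):
        return [p for t, p, g in zip(y_true, y_pred, groups)
                if g == group and (t == 1 or not qualified_only)]

    spd = rate(preds(a)) - rate(preds(b))
    eod = rate(preds(a, qualified_only=True)) - rate(preds(b, qualified_only=True))
    return spd, eod


# Toy data: group "x" has more qualified applicants than group "y",
# and the predictions happen to be perfectly accurate.
y_true = [1, 1, 1, 0, 1, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["x", "x", "x", "x", "y", "y", "y", "y"]
print(parity_and_opportunity(y_true, y_pred, groups, "x", "y"))  # (0.5, 0.0)
```

A perfectly accurate predictor fails statistical parity here (0.75 vs. 0.25 positive rates) yet satisfies equal opportunity, which is why relying on a single metric can mislead when the underlying base rates differ.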

Bias in Data and Algorithms

Bias can enter AI systems at various stages, from data collection and preprocessing to algorithm design and deployment. Data Bias is perhaps the most prevalent source of unfairness. If the data used to train an AI model is skewed, incomplete, or reflects existing societal biases, the model will inevitably learn and perpetuate these biases. Metrics for data bias involve analyzing data distributions across different demographic groups, identifying underrepresentation or overrepresentation, and implementing data augmentation or re-weighting techniques to mitigate skew.
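As one concrete re-weighting technique, "reweighing" (due to Kamiran and Calders) gives each training example the weight P(group) × P(label) / P(group, label), so that group membership and label become statistically independent in the weighted data. This sketch assumes the weights will be passed to a learner that accepts per-sample weights; the toy data is invented.

```python
from collections import Counter


def reweigh(groups, labels):
    """Weight each example by P(group) * P(label) / P(group, label)."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] / n) * (label_counts[y] / n) / (joint_counts[(g, y)] / n)
        for g, y in zip(groups, labels)
    ]


def weighted_positive_rate(groups, labels, weights, group):
    """Share of (weighted) positive labels within one group."""
    pos = sum(w for g, y, w in zip(groups, labels, weights) if g == group and y == 1)
    tot = sum(w for g, w in zip(groups, weights) if g == group)
    return pos / tot


# Toy data: group "a" has 3 of 4 positive labels, group "b" only 1 of 4.
groups = ["a"] * 4 + ["b"] * 4
labels = [1, 1, 1, 0, 1, 0, 0, 0]
weights = reweigh(groups, labels)
print([round(w, 2) for w in weights])  # [0.67, 0.67, 0.67, 2.0, 2.0, 0.67, 0.67, 0.67]
print(weighted_positive_rate(groups, labels, weights, "a"),
      weighted_positive_rate(groups, labels, weights, "b"))
```

Rare combinations (a negative label in group "a", a positive label in group "b") are up-weighted, so both groups end up with a weighted positive rate of 0.5.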

Algorithmic Bias, on the other hand, arises from the design of the AI algorithm itself. Certain algorithms might be inherently more prone to bias than others, or specific algorithm parameters might inadvertently amplify unfairness. Metrics for algorithmic bias involve evaluating the fairness properties of different algorithms, using techniques like fairness-aware machine learning, and carefully tuning algorithm parameters to minimize bias without sacrificing performance. Addressing bias requires a multi-pronged approach, tackling both data and algorithmic sources of unfairness.
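Tuning a parameter "to minimize bias without sacrificing performance" can be framed as a constrained search. The sketch below scans a single shared decision threshold and keeps the most accurate one whose disparate impact ratio stays at or above 0.8. The scores, labels, and the 0.8 floor are illustrative assumptions, and this simple search stands in for the wider family of fairness-aware methods rather than representing any particular one.

```python
def evaluate(threshold, scores, labels, groups):
    """Return (accuracy, disparate impact ratio) for one shared decision threshold."""
    preds = [1 if s >= threshold else 0 for s in scores]
    accuracy = sum(p == y for p, y in zip(preds, labels)) / len(labels)
    rates = []
    for g in set(groups):
        group_preds = [p for p, grp in zip(preds, groups) if grp == g]
        rates.append(sum(group_preds) / len(group_preds))
    top = max(rates)
    di_ratio = min(rates) / top if top > 0 else 1.0
    return accuracy, di_ratio


def best_fair_threshold(scores, labels, groups, min_di=0.8):
    """Most accurate threshold whose disparate impact ratio is at least min_di."""
    best = None
    for t in sorted(set(scores)):
        accuracy, di_ratio = evaluate(t, scores, labels, groups)
        if di_ratio >= min_di and (best is None or accuracy > best[1]):
            best = (t, accuracy, di_ratio)
    return best


# Toy model scores and true labels for two groups of four applicants.
scores = [0.9, 0.8, 0.7, 0.3, 0.6, 0.5, 0.35, 0.1]
labels = [1, 1, 1, 0, 1, 1, 0, 0]
groups = ["a"] * 4 + ["b"] * 4
print(best_fair_threshold(scores, labels, groups, min_di=0.0))  # threshold 0.5, accuracy 1.0, DI about 0.67
print(best_fair_threshold(scores, labels, groups))              # (0.35, 0.875, 1.0)
```

In this toy case the fairness constraint costs one misclassification (accuracy 1.0 down to 0.875), making the trade-off explicit rather than hidden.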

Intermediate metrics for systemic AI fairness move beyond surface-level assessments to encompass algorithmic bias, impact assessment, and a nuanced understanding of statistical parity, equality of opportunity, and equality of outcome.

SMB Strategies for Bias Mitigation

For SMBs, navigating bias landscapes requires a strategic and proactive approach. Start by conducting a Bias Audit of existing or planned AI systems. This audit should involve a systematic examination of data sources, algorithms, and potential impact on different customer or employee groups. Utilize fairness metrics like the disparate impact ratio and equality of opportunity metrics to quantify bias levels.

Implement Fairness-Aware AI Development Practices. This involves incorporating fairness considerations into every stage of the AI development lifecycle, from data collection and preprocessing to model training and deployment. Utilize fairness-aware algorithms and techniques, and regularly monitor AI system performance for fairness drift over time. Establish Ethical AI Guidelines for your SMB.

These guidelines should articulate your commitment to fairness, transparency, and accountability in AI adoption. Clearly define roles and responsibilities for ensuring AI fairness, and establish processes for addressing fairness concerns or complaints. These strategic steps, grounded in intermediate-level metrics and practices, are crucial for SMBs to navigate the complex landscape of AI bias and build fairer, more responsible AI systems.
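Monitoring for fairness drift, as recommended in the fairness-aware development practices above, can start as a periodic recomputation of a fairness metric on recent decisions, with an alert when it crosses a threshold. This sketch applies the disparate impact ratio to monthly batches; the period labels, counts, and the 0.8 alert level are hypothetical.

```python
def di_ratio(batch):
    """batch maps group -> (approved, total); returns lowest rate / highest rate."""
    rates = [approved / total for approved, total in batch.values()]
    top = max(rates)
    return min(rates) / top if top > 0 else 1.0


def drift_alerts(batches, alert_below=0.8):
    """Return (period, rounded ratio) for each period whose ratio drops below alert_below."""
    alerts = []
    for period, batch in batches:
        ratio = di_ratio(batch)
        if ratio < alert_below:
            alerts.append((period, round(ratio, 2)))
    return alerts


# Hypothetical monthly approval counts: (approved, total) per group.
monthly = [
    ("month 1", {"group_a": (40, 50), "group_b": (38, 50)}),
    ("month 2", {"group_a": (42, 50), "group_b": (35, 50)}),
    ("month 3", {"group_a": (45, 50), "group_b": (30, 50)}),
]
print(drift_alerts(monthly))  # [('month 3', 0.67)]
```

The ratio slides from 0.95 to about 0.83 before crossing the alert level, so plotting it over time can reveal a downward trend before any single period triggers an alert.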

Ignoring bias in AI is akin to navigating a business landscape with blinders on. You might move forward efficiently along a narrow path, but you risk stumbling into unforeseen pitfalls and missing broader opportunities. Systemic AI fairness, at this intermediate level, is about broadening your horizons, understanding the complexities of bias, and strategically mitigating its impact. It is about building AI systems that are not just efficient but also equitable, fostering trust and ensuring long-term sustainable growth in an increasingly AI-driven world.

Systemic Fairness Architectures

The initial enthusiasm surrounding AI’s transformative potential for SMBs often overlooks a critical dimension: the systemic nature of fairness. It is not merely about tweaking algorithms or diversifying datasets; it is about constructing AI systems within a broader ethical and societal framework, recognizing that fairness is not a static endpoint but an ongoing, dynamic process. Consider a fintech SMB utilizing AI for credit scoring.

Even if their algorithm achieves statistical parity on readily available demographic data, it might still perpetuate systemic unfairness if the underlying credit scoring model relies on factors that are themselves proxies for historical or societal disadvantages, such as zip code or access to traditional financial institutions. For SMBs operating at the advanced edge of AI adoption, the challenge shifts from mitigating individual biases to architecting systems that embody systemic fairness, demanding a deep engagement with ethical frameworks, societal impact, and long-term accountability.
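A quick screen for proxies such as zip code is to ask how well a single feature predicts the protected attribute on its own. The sketch below guesses the most common group within each feature value and compares that accuracy with always guessing the overall majority group; a large gap suggests the feature may act as a proxy. The zip codes and group labels are made up.

```python
from collections import Counter, defaultdict


def proxy_strength(feature_values, protected):
    """Return (accuracy predicting `protected` from the feature, baseline accuracy)."""
    by_value = defaultdict(Counter)
    for value, group in zip(feature_values, protected):
        by_value[value][group] += 1
    # Predict the most common protected group for each feature value.
    correct = sum(counts.most_common(1)[0][1] for counts in by_value.values())
    # Baseline: always predict the overall most common group.
    baseline = Counter(protected).most_common(1)[0][1]
    n = len(protected)
    return correct / n, baseline / n


zips = ["10001"] * 5 + ["20002"] * 5
group = ["g1"] * 4 + ["g2"] + ["g2"] * 5
acc, base = proxy_strength(zips, group)
print(f"feature accuracy {acc:.0%} vs baseline {base:.0%}")  # feature accuracy 90% vs baseline 60%
```

Because the per-value majority is fitted on the same data it is scored on, features with many distinct values will look more predictive than they are; a held-out sample gives a more honest estimate.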

Deconstructing Systemic Bias

Systemic bias is not simply the aggregation of individual biases; it is a deeply embedded, often invisible, web of interconnected biases that permeate societal structures and institutions. Addressing systemic AI fairness requires moving beyond individual-level metrics and focusing on Structural Fairness and Intersectional Bias. Structural fairness examines how AI systems interact with and potentially reinforce existing societal inequalities. Metrics for structural fairness involve analyzing the broader societal context in which AI systems operate, considering historical injustices, power imbalances, and systemic discrimination.

This might involve qualitative assessments of the potential for AI to exacerbate existing inequalities in areas like access to opportunity, resource allocation, or social mobility. Intersectional bias recognizes that individuals belong to multiple social groups simultaneously (e.g., race, gender, class) and that biases can compound and interact in complex ways. Metrics for intersectional bias involve disaggregating fairness metrics across multiple intersecting demographic categories, identifying disparities that might be masked when considering only single demographic dimensions. For example, an AI hiring system might appear fair when considering race and gender separately, but reveal significant unfairness when examining the intersection of race and gender, such as for women of color. Deconstructing systemic bias demands a multi-dimensional and context-aware approach to fairness metrics.
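Disaggregating across intersections can be sketched by computing selection rates for each single attribute and for each combination of attributes. The toy hiring data below is constructed so that the rates by race alone and by gender alone are identical, while two intersections are hired far less often. Group names and counts are invented.

```python
from collections import defaultdict


def rates_by(records, keys):
    """Selection rate for each combination of the given attribute names."""
    hired = defaultdict(int)
    total = defaultdict(int)
    for r in records:
        combo = tuple(r[key] for key in keys)
        total[combo] += 1
        hired[combo] += r["hired"]
    return {combo: hired[combo] / total[combo] for combo in total}


def make(race, gender, n_hired, n=10):
    """Build n candidate records of which the first n_hired were hired."""
    return [{"race": race, "gender": gender, "hired": int(i < n_hired)} for i in range(n)]


records = (make("group_1", "man", 2) + make("group_1", "woman", 8)
           + make("group_2", "man", 8) + make("group_2", "woman", 2))

print(rates_by(records, ["race"]))            # both race groups at 0.5
print(rates_by(records, ["gender"]))          # both genders at 0.5
print(rates_by(records, ["race", "gender"]))  # 0.2 vs 0.8 at the intersections
```

Looking only at the first two reports would suggest a perfectly fair system; the disparity appears only when both attributes are examined together.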

Causal Fairness and Counterfactual Reasoning

Traditional fairness metrics often focus on correlations and statistical disparities, but they may not capture the underlying causal mechanisms that drive unfair outcomes. Causal Fairness aims to address this limitation by incorporating causal reasoning into fairness evaluations. This involves developing causal models that represent the relationships between AI system inputs, outputs, and potential sources of bias, allowing for a deeper understanding of how AI systems contribute to unfairness. Metrics for causal fairness might involve using techniques like Counterfactual Fairness, which asks: “Would the AI decision have been different if the individual had belonged to a different demographic group, with everything not caused by group membership held constant?” Counterfactual fairness helps to isolate the causal effect of protected attributes on AI outcomes, providing a more nuanced assessment of fairness than purely correlational metrics.
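Counterfactual fairness is defined relative to a causal model, so any example must assume one. The toy structural model below is entirely hypothetical: group membership A shifts neighborhood N, income I is independent of A, and the scoring model uses only N and I. Following the usual abduction, action, and prediction steps, the sketch recovers the individual's background noise, sets A to the other value, recomputes the variables A influences, and compares scores. "Holding other factors constant" here means holding the individual's background factors fixed, not the variables that A itself affects.

```python
def model_score(n, i):
    """Hypothetical scoring model: it never looks at group membership A directly."""
    return 1.0 * n + 0.5 * i


def counterfactual_scores(a, n, i, a_cf):
    """Toy structural model (assumed): N = 2*A + u_N, I = u_I, with A in {0, 1}."""
    # Abduction: recover this individual's background noise from the observed values.
    u_n = n - 2 * a
    u_i = i
    # Action and prediction: set A to a_cf and recompute the variables A influences.
    n_cf = 2 * a_cf + u_n
    i_cf = u_i
    return model_score(n, i), model_score(n_cf, i_cf)


factual, counterfactual = counterfactual_scores(a=1, n=5.0, i=4.0, a_cf=0)
naive_flip = model_score(5.0, 4.0)  # flipping A alone changes nothing: A is not an input
print(factual, counterfactual, naive_flip)  # 7.0 5.0 7.0
```

A naive flip test would declare this model fair, while the counterfactual comparison (7.0 vs. 5.0) shows group membership still changes the score through neighborhood.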

Another related concept is Path-Specific Counterfactual Fairness, which focuses on specific causal pathways through which bias might propagate. For example, in a credit scoring system, bias might arise not just from direct discrimination based on race, but also indirectly through factors like neighborhood, which are themselves causally linked to historical racial segregation. Causal fairness metrics offer a more rigorous and insightful approach to evaluating systemic AI fairness.
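Path-specific reasoning asks which causal routes from group membership A to the decision are acceptable. In a linear toy model, the effect carried along a path is the product of the coefficients on that path, so the total effect of A splits into a direct part and a part flowing through neighborhood. All coefficients below are assumptions chosen for illustration.

```python
# Hypothetical linear causal model for a credit score:
#   N     = ALPHA * A + u_N                  (neighborhood depends on group A)
#   score = BETA_A * A + BETA_N * N + ...    (model uses A directly and via N)
ALPHA = 2.0
BETA_A = -0.5
BETA_N = 1.0


def path_effects(alpha, beta_a, beta_n):
    """Effect on the score of changing A from 0 to 1, split by causal path."""
    direct = beta_a                    # A -> score
    via_neighborhood = alpha * beta_n  # A -> N -> score
    return {"direct": direct, "via_neighborhood": via_neighborhood,
            "total": direct + via_neighborhood}


effects = path_effects(ALPHA, BETA_A, BETA_N)
print(effects)  # {'direct': -0.5, 'via_neighborhood': 2.0, 'total': 1.5}
```

Setting `BETA_A` to 0, which is what "not using race as a feature" amounts to, still leaves an effect of 2.0 through neighborhood. A path-specific criterion forces an explicit decision about whether that path is acceptable.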

Fairness Audits and Ethical Frameworks

Ensuring systemic AI fairness requires not just technical metrics but also robust governance mechanisms and ethical frameworks. Fairness Audits are systematic evaluations of AI systems to assess their fairness properties and identify potential biases. Advanced fairness audits go beyond simple metric calculations to involve qualitative assessments, stakeholder consultations, and ethical reviews. They might incorporate techniques like Adversarial Audits, where independent experts attempt to find vulnerabilities or biases in AI systems, and Participatory Audits, where affected communities are involved in the audit process.

Ethical frameworks provide overarching principles and guidelines for responsible AI development and deployment. Frameworks like the Belmont Report principles of respect for persons, beneficence, and justice, or the OECD Principles on AI, offer a broader ethical compass for navigating complex fairness dilemmas. Metrics for ethical alignment involve assessing the extent to which AI systems and organizational practices adhere to these ethical frameworks, considering not just technical fairness but also broader ethical values like human dignity, autonomy, and social justice. Fairness audits and ethical frameworks are essential components of a systemic fairness architecture.

Advanced metrics for systemic AI fairness delve into structural fairness, intersectional bias, causal fairness, counterfactual reasoning, and the integration of fairness audits and ethical frameworks.

Building Systemic Fairness into SMB Operations

For SMBs committed to advanced AI adoption, building systemic fairness requires a holistic and long-term approach. Establish a Cross-Functional AI Ethics Committee, bringing together diverse perspectives from across the organization, including technical experts, business leaders, and representatives from affected communities. This committee should be responsible for overseeing AI fairness initiatives, developing ethical guidelines, and conducting regular fairness audits. Implement a Continuous Fairness Monitoring System, going beyond one-time audits to track AI system performance for fairness drift over time.

Utilize advanced fairness metrics and techniques, and establish feedback loops to continuously improve AI fairness based on ongoing monitoring and evaluation. Engage in Stakeholder Dialogue, actively seeking input from customers, employees, and community groups who might be affected by your AI systems. Incorporate diverse perspectives into AI design and deployment processes, and establish mechanisms for addressing fairness concerns and complaints. These systemic steps, grounded in advanced fairness metrics and ethical principles, are crucial for SMBs to build truly fair and responsible AI systems that contribute to a more equitable and just society.

Ignoring systemic fairness in AI is like building a business on a foundation of sand. It might appear solid in the short term, but it is vulnerable to collapse under the weight of societal scrutiny, ethical challenges, and long-term reputational risks. Systemic AI fairness, at this advanced level, is about building a robust and resilient business, grounded in ethical principles and a deep commitment to social responsibility. It is about leveraging AI not just for profit maximization, but for creating positive societal impact, fostering trust, and ensuring a sustainable and equitable future for your business and the communities you serve.

Reflection

Perhaps the most unsettling truth about systemic AI fairness is that it cannot be fully achieved through metrics alone. Metrics provide a valuable lens, quantifying disparities and highlighting potential biases, yet they are inherently reductive, capturing only a partial view of a deeply complex and evolving landscape. The pursuit of perfect fairness metrics can become a distraction, shifting focus from the messy, human-centered work of building truly equitable systems to a technical exercise in optimizing numbers.

Systemic fairness, in its most profound sense, demands a constant questioning of assumptions, a willingness to confront uncomfortable truths about societal biases, and a commitment to ongoing dialogue and adaptation. It is not a destination to be reached, but a continuous journey of ethical reflection and action, requiring businesses to move beyond mere compliance and embrace a deeper, more humanistic understanding of fairness in the age of AI.

Systemic AI fairness metrics for SMBs reflect data diversity, algorithmic transparency, bias audits, and ethical AI guidelines.
