Fundamentals

Ninety percent of Fortune 500 companies use some form of automated recruiting technology. Small businesses, the lifeblood of most economies, often lag behind, relying on gut feelings and inherited biases, unaware that even their first forays into automation can amplify existing inequalities in hiring.

Unseen Bias in Digital Tools

Small businesses, in their pursuit of efficiency, are increasingly turning to algorithmic tools for hiring. These tools, ranging from resume screening software to AI-powered interview platforms, promise to streamline the recruitment process, saving time and resources. However, the data that fuels these algorithms can inadvertently encode societal biases, leading to discriminatory outcomes, even when no malicious intent exists. It’s not about intentionally discriminatory algorithms; rather, it’s about the subtle, often invisible ways bias creeps into the data used to train them.

Data Points Revealing Algorithmic Prejudice

Several key business data points can signal algorithmic bias in SMB hiring practices. Analyzing these metrics can provide a clear picture of where and how bias might be manifesting, allowing for proactive intervention and fairer hiring processes.

Demographic Skews in Applicant Pools

One of the most immediate indicators is a noticeable demographic skew in applicants who make it past the initial algorithmic screening stage. If your applicant tracking system data reveals that candidates from certain demographic groups (e.g., based on gender, race, age, or even zip code) are disproportionately filtered out before human review, this is a major red flag. For example, if your algorithm is trained on historical hiring data that, unbeknownst to you, favored male candidates for technical roles, the system might learn to penalize resumes from female applicants, even if they possess equal or superior qualifications. This isn’t necessarily a conscious decision by the algorithm; it’s a reflection of patterns in the data it was fed.

A skewed applicant pool, evident in demographic data, suggests algorithmic filters may be unintentionally replicating historical biases.
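The check described above can be sketched in a few lines. This is a minimal illustration, assuming you can export one row per applicant with a self-reported group label and a pass/fail flag for the algorithmic screen; the names `selection_rates`, `impact_ratios`, and the sample data are hypothetical. The 0.8 cutoff echoes the "four-fifths" rule of thumb from US employee-selection guidance and is used here only as a screening heuristic, not as a legal test.

```python
from collections import Counter

def selection_rates(records):
    """Return {group: share of applicants who passed the screen}."""
    applied, passed = Counter(), Counter()
    for group, passed_screen in records:
        applied[group] += 1
        if passed_screen:
            passed[group] += 1
    return {g: passed[g] / applied[g] for g in applied}

def impact_ratios(rates):
    """Divide each group's pass rate by the highest group's pass rate."""
    top = max(rates.values())
    return {g: (r / top if top else 0.0) for g, r in rates.items()}

# Hypothetical export: (group label, passed algorithmic screen?)
records = (
    [("group_a", True)] * 40 + [("group_a", False)] * 60
    + [("group_b", True)] * 20 + [("group_b", False)] * 80
)

rates = selection_rates(records)
ratios = impact_ratios(rates)
# Groups whose pass rate is below 80% of the best-served group's rate.
flagged = [g for g, r in ratios.items() if r < 0.8]
print(rates, ratios, flagged)
```

A flagged group is a prompt for closer review rather than proof of bias, especially when applicant counts are small.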

Keyword Optimization Gone Wrong

Algorithms often rely heavily on keyword matching to sift through resumes and applications. While seemingly objective, the choice of keywords and their relative weighting can introduce bias. Consider job descriptions that inadvertently use gendered language or terms more commonly associated with specific racial or ethnic groups. If your algorithm prioritizes resumes containing these biased keywords, it will naturally favor candidates from the dominant demographic, not because of their skills, but because of linguistic patterns in their application materials.

Analyzing the frequency and type of keywords that trigger positive and negative scores in your algorithmic screening can reveal unintended biases baked into the system’s logic. This isn’t about malicious keywords; it’s about the subtle ways language can reflect and perpetuate societal stereotypes.
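One way to start such a keyword review is to cross-check the screener’s weighted keywords against lists of coded terms. The sketch below assumes the tool lets you export keywords with their weights, which many tools may not; the word lists are short, hypothetical examples rather than a validated lexicon, and `coded_keyword_weights` is an illustrative name.

```python
# Hypothetical, illustrative word lists; not a validated lexicon.
MASCULINE_CODED = {"aggressive", "dominant", "competitive", "rockstar"}
FEMININE_CODED = {"supportive", "collaborative", "nurturing"}

def coded_keyword_weights(keyword_weights):
    """Group the screener's weighted keywords by the coded list they fall in."""
    report = {"masculine": {}, "feminine": {}}
    for word, weight in keyword_weights.items():
        w = word.lower()
        if w in MASCULINE_CODED:
            report["masculine"][w] = weight
        elif w in FEMININE_CODED:
            report["feminine"][w] = weight
    return report

# Hypothetical export of keyword -> score contribution from a screening tool.
weights = {"Python": 2.0, "aggressive": 1.5, "rockstar": 1.0, "collaborative": -0.5}
report = coded_keyword_weights(weights)
print(report)
```

In this made-up export, masculine-coded terms add to a score while a feminine-coded term subtracts from it, which is the kind of pattern worth raising with a vendor.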

Performance Data Discrepancies Post-Hire

Another critical data point lies in post-hire performance evaluations. If you observe statistically significant differences in performance ratings or promotion rates between demographic groups hired through algorithmic processes versus those hired through traditional methods, it warrants closer inspection. It could indicate that the algorithm, while appearing efficient in initial screening, is not actually selecting the most capable candidates across all demographics.

Perhaps the algorithm is optimizing for traits correlated with a specific demographic group, but not necessarily for overall job success in a diverse workforce. Examining performance review data, promotion timelines, and even attrition rates across different hiring cohorts can expose disparities introduced by algorithmic selection.

Geographic Filtering and Socioeconomic Bias

Algorithms sometimes incorporate geographic data, such as applicant zip codes, into their decision-making. While seemingly innocuous, this can perpetuate socioeconomic bias. If an algorithm penalizes applicants from certain zip codes based on assumptions about the quality of education or work ethic in those areas, it’s effectively discriminating against individuals based on their place of residence, which often correlates with socioeconomic status and racial demographics.

Analyzing the geographic distribution of candidates filtered out by the algorithm, and comparing it to broader socioeconomic data for those regions, can reveal hidden biases linked to location. This isn’t about geography itself being biased; it’s about how geographic data can become a proxy for other, more sensitive attributes.
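As a rough illustration of that comparison, the sketch below correlates per-zip-code rejection rates with an external socioeconomic indicator. The figures are invented, the median-income column stands in for whatever regional data you have access to, and `pearson` is a plain implementation of the Pearson correlation coefficient.

```python
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined when one series is constant")
    return cov / (sx * sy)

# Hypothetical data, one entry per zip code.
median_income_k = [30, 50, 70, 90]       # external socioeconomic data
rejection_rate = [0.8, 0.6, 0.4, 0.2]    # share filtered out by the algorithm

r = pearson(median_income_k, rejection_rate)
print(round(r, 3))
```

A strongly negative value suggests location may be acting as a proxy for income. With only a handful of zip codes, treat the number as a prompt for investigation, not a conclusion.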

Sentiment Analysis and Cultural Nuances

Some advanced hiring algorithms incorporate sentiment analysis to assess the tone and language used in resumes, cover letters, or even video interviews. However, sentiment analysis algorithms are often trained on datasets that predominantly reflect Western cultural norms and linguistic patterns. This can lead to biased interpretations of communication styles from individuals from different cultural backgrounds. For instance, direct communication styles, common in some cultures, might be misconstrued as aggressive or overly assertive by an algorithm trained on data that values indirectness and politeness.

Analyzing sentiment scores assigned to applicants from diverse cultural backgrounds, and comparing them to human assessments of the same materials, can highlight cultural biases embedded in sentiment analysis tools. This isn’t about sentiment analysis being inherently flawed; it’s about the need for culturally sensitive training data and interpretation.
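A simple version of that comparison is the average gap between the tool’s score and a human reviewer’s score for the same material, split by group. The sketch assumes both scores are on the same 0 to 1 scale; the group labels and numbers are hypothetical.

```python
from collections import defaultdict
from statistics import mean

def mean_gap_by_group(rows):
    """Average (algorithm score - human score) per group."""
    gaps = defaultdict(list)
    for group, algo_score, human_score in rows:
        gaps[group].append(algo_score - human_score)
    return {g: mean(v) for g, v in gaps.items()}

# Hypothetical rows: (communication-style group, algorithm score, human score)
rows = [
    ("direct_style", 0.30, 0.70),
    ("direct_style", 0.40, 0.80),
    ("indirect_style", 0.70, 0.70),
    ("indirect_style", 0.80, 0.75),
]

gaps = mean_gap_by_group(rows)
print(gaps)
```

A gap that is consistently negative for one group and near zero for another suggests the tool reads that group’s style less favorably than human reviewers do.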

Addressing Algorithmic Bias Practically

For SMBs, tackling algorithmic bias in hiring is not about abandoning automation, but about adopting a more critical and data-driven approach to its implementation. It requires a commitment to transparency, ongoing monitoring, and a willingness to adjust algorithmic processes based on empirical evidence.

Auditing and Monitoring Algorithmic Outputs

Regularly audit the outputs of your hiring algorithms. This involves systematically analyzing the demographic composition of applicant pools at each stage of the hiring process, from initial screening to final selection. Compare these demographics to the overall applicant pool and to the demographics of your existing workforce.

Look for statistically significant deviations that might indicate bias. This isn’t a one-time fix; it’s an ongoing process of vigilance and adjustment.
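To make "statistically significant" concrete, one common choice is a two-proportion z-test on the pass rates of two groups. The sketch below is one possible approach, not the only valid test; the counts are hypothetical, and the normal approximation it relies on is unreliable for very small samples.

```python
from math import sqrt, erfc

def two_proportion_z(pass_a, n_a, pass_b, n_b):
    """Two-sided z-test for a difference between two pass rates."""
    p_a, p_b = pass_a / n_a, pass_b / n_b
    pooled = (pass_a + pass_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / se
    p_value = erfc(abs(z) / sqrt(2))  # two-sided p-value under the normal approximation
    return z, p_value

# Hypothetical audit: 40 of 100 group A applicants pass, 20 of 100 group B applicants pass.
z, p_value = two_proportion_z(40, 100, 20, 100)
print(round(z, 2), round(p_value, 4))
```

A small p-value says the gap is unlikely to be chance alone. It does not say why the gap exists, so it should trigger a review of the screening criteria rather than end one.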

Diversifying Training Data

If you are using customizable algorithms, actively work to diversify the training data. Ensure that the data used to train your algorithms reflects the diversity you seek in your workforce. This might involve incorporating data from successful employees from underrepresented groups, or even intentionally oversampling data from these groups to counteract historical biases in existing datasets. This isn’t about data manipulation; it’s about actively shaping the algorithm to promote fairness and inclusion.
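Where a tool accepts per-example weights, reweighting is a lighter alternative to duplicating records. The sketch below computes weights so that each group contributes equally to training; whether your tool supports sample weights is an assumption, and `balancing_weights` is an illustrative name.

```python
from collections import Counter

def balancing_weights(groups):
    """Weight each example so every group carries equal total weight."""
    counts = Counter(groups)
    n, k = len(groups), len(counts)
    return [n / (k * counts[g]) for g in groups]

# Hypothetical training set: 8 past hires from group A, 2 from group B.
groups = ["group_a"] * 8 + ["group_b"] * 2
weights = balancing_weights(groups)

total_a = sum(w for g, w in zip(groups, weights) if g == "group_a")
total_b = sum(w for g, w in zip(groups, weights) if g == "group_b")
print(total_a, total_b)
```

Reweighting balances influence but cannot fix labels that were themselves biased, so it works best alongside the audits described earlier.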

Human Oversight and Intervention

Algorithms should augment, not replace, human judgment in hiring. Implement processes that ensure human reviewers have the final say in hiring decisions, especially at critical stages. Train your hiring managers to be aware of potential algorithmic biases and to critically evaluate algorithm-generated recommendations.

Human oversight acts as a crucial safeguard against unintended discriminatory outcomes. This isn’t about distrusting algorithms; it’s about recognizing their limitations and leveraging human expertise to ensure fairness.

Transparency with Candidates

Be transparent with job applicants about your use of algorithmic tools in the hiring process. Explain how these tools are used and what data they consider. Transparency builds trust and allows candidates to understand the process, even if they are not ultimately selected.

It also creates an environment of accountability, encouraging you to use these tools responsibly. This isn’t about revealing trade secrets; it’s about ethical and responsible use of technology in hiring.

Continuous Improvement and Adaptation

Algorithmic bias is not a static problem; it evolves as data changes and societal norms shift. Commit to continuous improvement and adaptation of your hiring algorithms. Regularly review performance data, solicit feedback from hiring managers and employees, and be prepared to refine your algorithmic processes to mitigate newly identified biases. This isn’t about perfection; it’s about a commitment to ongoing progress towards fairer and more equitable hiring practices.

By diligently monitoring business data, actively addressing data biases, and maintaining human oversight, SMBs can harness the efficiency of algorithmic hiring tools while mitigating the risk of perpetuating unfair hiring practices. It’s a journey towards a more equitable and effective recruitment process, one data point at a time.

Navigating Algorithmic Hiring Pitfalls

While large corporations grapple with public scrutiny over AI ethics, small to medium businesses often operate under the radar, yet the algorithms they deploy in hiring, though less sophisticated, can still subtly undermine diversity and inclusion goals, often manifesting in data patterns overlooked in the daily rush.

Beyond Surface Metrics: Deeper Data Analysis

For SMBs moving beyond basic adoption of algorithmic hiring tools, understanding bias requires digging deeper into the data. Surface-level metrics like overall demographic representation are insufficient. A more granular analysis of various business data points is essential to uncover the subtle ways algorithmic bias can manifest and impact hiring outcomes.

Advanced Data Indicators of Algorithmic Bias

Intermediate-level analysis moves beyond simple demographic skews and examines more nuanced data patterns that reveal algorithmic bias. These indicators often require a more sophisticated understanding of statistical analysis and business intelligence tools.

Differential Impact Across Job Roles

Algorithmic bias might not be uniform across all job roles within an SMB. Analyze hiring data separately for different job categories (e.g., technical roles, sales roles, administrative roles). It’s possible that an algorithm exhibits bias in selecting candidates for one type of role but not another. This differential impact can be masked when looking at aggregate data across the entire company.

For example, an algorithm trained primarily on data from the sales department, which historically might have been male-dominated, could inadvertently disadvantage female applicants for sales positions, while showing no bias in hiring for administrative roles. Disaggregating hiring data by job role provides a more precise picture of where bias is occurring.
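The masking effect is easy to reproduce with invented numbers. In the sketch below, company-wide pass rates are identical for two groups, yet the per-role breakdown shows opposite disparities in sales and administrative roles. The data and the `pass_rates` helper are hypothetical.

```python
from collections import defaultdict

def pass_rates(rows, keys):
    """Pass rate for each combination of the given column names."""
    totals = defaultdict(lambda: [0, 0])  # key -> [passed, applied]
    for row in rows:
        k = tuple(row[name] for name in keys)
        totals[k][0] += int(row["passed"])
        totals[k][1] += 1
    return {k: p / n for k, (p, n) in totals.items()}

def make(role, group, passed, failed):
    """Build hypothetical applicant rows for one role/group combination."""
    hit = {"role": role, "group": group, "passed": True}
    miss = {"role": role, "group": group, "passed": False}
    return [hit] * passed + [miss] * failed

rows = (
    make("sales", "men", 30, 70) + make("sales", "women", 10, 90)
    + make("admin", "men", 10, 90) + make("admin", "women", 30, 70)
)

overall = pass_rates(rows, ["group"])          # aggregate view
by_role = pass_rates(rows, ["role", "group"])  # disaggregated view
print(overall)
print(by_role)
```

The aggregate view reports 20% for both groups, while the sales breakdown shows a threefold gap.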

Algorithm Confidence Scores and Demographic Correlation

Many algorithmic hiring tools assign confidence scores to candidates, indicating the algorithm’s certainty in its assessment. Analyze whether these confidence scores correlate with demographic attributes. If you find that the algorithm consistently assigns lower confidence scores to candidates from certain demographic groups, even when their qualifications are comparable to those of higher-scoring candidates from other groups, it suggests potential bias.

This analysis requires access to the raw output data from the algorithmic tool, including confidence scores, which might not be readily available in standard reports. Examining the distribution of confidence scores across demographics can reveal subtle patterns of algorithmic preference or prejudice.

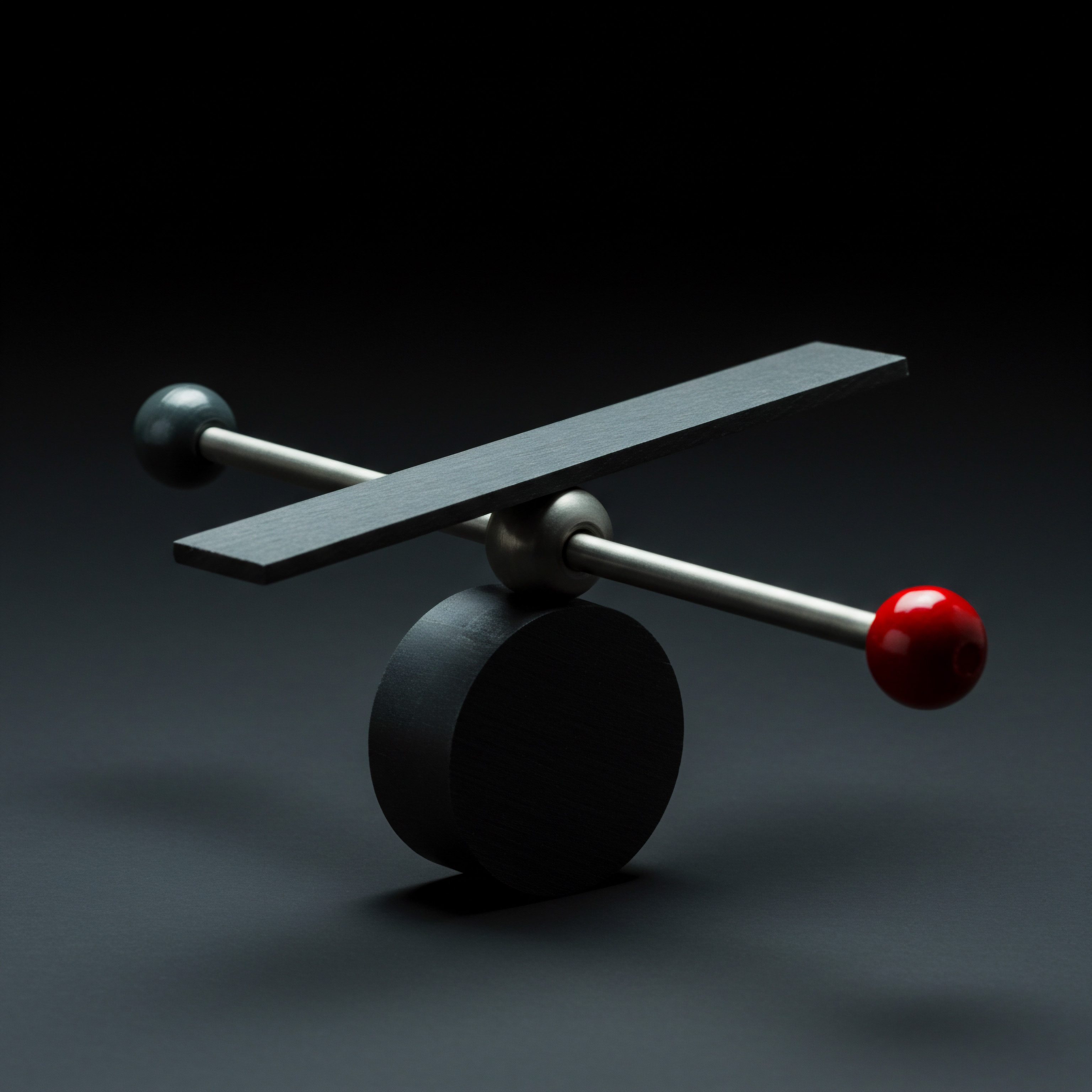

Confidence score disparities, when correlated with demographics, can indicate algorithmic preference patterns needing further investigation.
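One way to compare "comparable qualifications" is to bucket candidates into qualification bands first and then compare mean confidence scores across groups within each band. The sketch assumes the tool exposes raw scores and that you can assign a band (for example, by years of experience); all names and values are hypothetical.

```python
from collections import defaultdict
from statistics import mean

def score_gap_by_band(candidates):
    """For each qualification band, return max minus min of the group mean scores."""
    scores = defaultdict(lambda: defaultdict(list))
    for c in candidates:
        scores[c["band"]][c["group"]].append(c["score"])
    gaps = {}
    for band, by_group in scores.items():
        means = [mean(v) for v in by_group.values()]
        gaps[band] = max(means) - min(means)
    return gaps

# Hypothetical raw output from a screening tool.
candidates = [
    {"band": "senior", "group": "group_a", "score": 0.90},
    {"band": "senior", "group": "group_a", "score": 0.80},
    {"band": "senior", "group": "group_b", "score": 0.70},
    {"band": "senior", "group": "group_b", "score": 0.60},
    {"band": "junior", "group": "group_a", "score": 0.50},
    {"band": "junior", "group": "group_b", "score": 0.50},
]

gaps = score_gap_by_band(candidates)
print(gaps)
```

Here the senior band shows a 0.2 gap between groups with similar qualifications, while the junior band shows none.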

Time-To-Hire and Demographic Disparities

Time-to-hire, a key metric for recruitment efficiency, can also reveal algorithmic bias. Analyze whether there are statistically significant differences in time-to-hire for candidates from different demographic groups who are ultimately hired through algorithmic processes. If candidates from certain demographics consistently experience longer hiring cycles, it could indicate that the algorithm is creating unnecessary hurdles or delays for these groups.

This might manifest as candidates from underrepresented groups being subjected to more rounds of algorithmic screening or experiencing delays in moving to human review stages. Tracking time-to-hire by demographic group provides insights into potential algorithmic bottlenecks and unequal treatment.
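Tracking this can be as simple as computing the median number of days from application to offer for each group. The sketch below uses made-up dates and group labels; the median is chosen over the mean so that one unusually slow hire does not dominate.

```python
from collections import defaultdict
from datetime import date
from statistics import median

def median_days_to_hire(hires):
    """Median days between application and hire, per group."""
    days = defaultdict(list)
    for group, applied, hired in hires:
        days[group].append((hired - applied).days)
    return {g: median(v) for g, v in days.items()}

# Hypothetical hires: (group, application date, hire date)
hires = [
    ("group_a", date(2024, 1, 1), date(2024, 1, 21)),
    ("group_a", date(2024, 1, 5), date(2024, 1, 27)),
    ("group_a", date(2024, 1, 10), date(2024, 2, 3)),
    ("group_b", date(2024, 1, 1), date(2024, 2, 5)),
    ("group_b", date(2024, 1, 3), date(2024, 2, 12)),
    ("group_b", date(2024, 1, 8), date(2024, 2, 22)),
]

medians = median_days_to_hire(hires)
print(medians)
```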

Attrition Rates and Algorithmic Hiring Cohorts

Long-term data on employee attrition can also expose algorithmic bias. Compare attrition rates between employees hired through algorithmic processes and those hired through traditional methods, broken down by demographic groups. If employees from certain demographics hired algorithmically exhibit higher attrition rates, it could suggest that the algorithm is not accurately assessing long-term fit or is selecting candidates who are less likely to thrive in the company culture.

This could be due to the algorithm prioritizing short-term metrics or overlooking factors crucial for long-term employee satisfaction and retention for certain demographic groups. Analyzing attrition data provides a delayed but crucial indicator of algorithmic impact on workforce diversity and inclusion.

Feedback Loops and Bias Amplification

Algorithmic hiring systems often incorporate feedback loops, where data from past hiring decisions and employee performance is used to refine the algorithm’s future performance. However, if the initial data contains biases, these feedback loops can amplify and perpetuate those biases over time. For example, if an algorithm initially under-selects candidates from a particular demographic group, the performance data it subsequently receives will be skewed towards the over-represented group, further reinforcing the algorithm’s biased tendencies. Understanding these feedback loops is crucial for mitigating long-term bias.

Analyze how the algorithm is being retrained and whether the retraining process itself is susceptible to perpetuating existing biases. This requires a deep dive into the algorithm’s architecture and retraining mechanisms.
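The amplification dynamic can be illustrated with a toy simulation. This is not a model of any real hiring tool: it simply assumes that the algorithm over-weights whichever group dominates its training data (the `sharpen` exponent) and that each retraining blends old data with the latest hires (the `mix` fraction). Both parameters are invented for illustration.

```python
def simulate_feedback(initial_share, rounds, sharpen=1.5, mix=0.5):
    """Toy model: group B's share of the training data across retraining rounds."""
    share = initial_share
    history = [share]
    for _ in range(rounds):
        # Assumed behaviour: the model favours whoever dominates its data.
        b = share ** sharpen
        a = (1 - share) ** sharpen
        hired_share = b / (a + b)
        # The next training set blends the old data with the newest hires.
        share = (1 - mix) * share + mix * hired_share
        history.append(share)
    return history

skewed = simulate_feedback(0.4, rounds=5)    # group B starts at 40% of the data
balanced = simulate_feedback(0.5, rounds=5)  # balanced starting point
print([round(s, 3) for s in skewed])
print(balanced)
```

Under these assumptions a modest initial imbalance shrinks group B’s share every round, while a balanced starting point stays balanced, which is why the composition of the retraining data deserves scrutiny.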

Strategic Business Responses to Algorithmic Bias

Addressing algorithmic bias at the intermediate level requires a more strategic and data-driven approach, moving beyond reactive measures to proactive prevention and mitigation strategies. SMBs need to integrate bias detection and mitigation into their core hiring processes and corporate strategy.

Establishing Bias Review Boards

Create a dedicated bias review board composed of diverse stakeholders from HR, IT, and other relevant departments. This board should be responsible for overseeing the implementation and monitoring of algorithmic hiring tools, conducting regular bias audits, and recommending corrective actions. The board should have the authority to challenge algorithmic outputs and advocate for fairer hiring practices. This establishes institutional accountability for algorithmic fairness.

Developing Algorithmic Fairness Metrics

Define specific, measurable metrics for algorithmic fairness. These metrics should go beyond simple demographic representation and incorporate indicators like differential impact, confidence score disparities, and time-to-hire discrepancies. Regularly track these metrics and set targets for improvement.

Reporting on these metrics, even if only internally, can increase transparency and accountability. This transforms algorithmic fairness from an abstract concept into a quantifiable business objective.

Implementing Blind Resume Reviews (Even Algorithmically)

Extend the concept of blind resume reviews to algorithmic screening processes. Configure algorithms to initially screen resumes without considering demographic information like names, gender indicators, or zip codes. Demographic data can be introduced at later stages, if necessary, but only after the initial pool of qualified candidates has been identified based on skills and experience alone.

This minimizes the influence of demographic bias in the early stages of screening. This applies the principles of blind auditions to the algorithmic realm.
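If applications reach the screener as structured records, the blind step can be a small filter applied before scoring. The field names below are hypothetical and would need to match your own applicant tracking system’s export.

```python
# Hypothetical field names; adjust to match your applicant tracking system.
REDACTED_FIELDS = {"name", "gender", "date_of_birth", "zip_code", "photo_url"}

def redact(application):
    """Return a copy of the application without demographic or proxy fields."""
    return {k: v for k, v in application.items() if k not in REDACTED_FIELDS}

application = {
    "id": 101,
    "name": "Jordan Example",
    "zip_code": "00000",
    "skills": ["bookkeeping", "payroll"],
    "years_experience": 6,
}

blind = redact(application)
print(blind)
```

Dropping fields does not remove proxies hidden in free text, such as club memberships or school names, so redaction complements the audits above rather than replacing them.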

Algorithmic Explainability and Transparency Initiatives

Demand algorithmic explainability from vendors of hiring tools. Understand how the algorithms work, what data they use, and what factors drive their decisions. Transparency is crucial for identifying and mitigating bias. If vendors cannot provide sufficient explainability, consider alternative tools or develop in-house solutions where you have greater control and visibility.

Push for algorithmic transparency as a key vendor selection criterion. This empowers SMBs to understand and control the technology they use.

Continuous Algorithmic Refinement and Ethical Audits

Commit to continuous algorithmic refinement based on ongoing data analysis and ethical audits. Regularly review algorithm performance, identify and address biases, and update algorithms to reflect evolving fairness standards and business goals. Engage external ethical auditors to provide independent assessments of your algorithmic hiring processes.

This fosters a culture of continuous improvement and ethical responsibility in algorithmic hiring. Algorithmic fairness is not a destination but a journey of continuous adaptation and refinement.

By adopting these intermediate-level strategies, SMBs can move beyond simply using algorithms for efficiency and begin to proactively shape them as tools for promoting fairness, diversity, and inclusion in their hiring practices. It’s about embedding ethical considerations into the very fabric of their automated recruitment processes.

Strategic Algorithmic Bias Mitigation and Competitive Advantage

Multinational corporations now face intense regulatory scrutiny and brand damage from biased AI. For SMBs, algorithmic bias in hiring is a less discussed but equally potent threat, both ethically and strategically: it can erode competitive edge through homogenous talent pools and missed innovation opportunities, and the data points that reveal it are often buried beneath daily operational concerns.

Holistic Business Data Ecosystems for Bias Detection

At an advanced level, addressing algorithmic bias in SMB hiring transcends isolated data points and requires a holistic view of the entire business data ecosystem. Bias detection becomes integrated with broader business intelligence and strategic decision-making, leveraging sophisticated analytical frameworks and cross-functional data integration.

Sophisticated Data Signals of Algorithmic Bias

Advanced analysis moves beyond individual metrics and examines complex interactions and systemic patterns within the business data landscape to uncover subtle and deeply embedded algorithmic biases. These signals often require advanced statistical modeling, machine learning techniques, and a multidisciplinary approach.

Intersectionality of Bias and Data Silos

Bias does not operate in isolation; it is often intersectional, manifesting differently for individuals with multiple marginalized identities. Analyze hiring data through an intersectional lens, examining how algorithmic bias affects individuals at the intersection of different demographic categories (e.g., women of color, older LGBTQ+ individuals). This requires breaking down data silos and integrating data from HR, diversity and inclusion initiatives, and employee resource groups to gain a comprehensive understanding of intersectional bias.

Standard demographic categories alone are insufficient; intersectional analysis reveals the complex realities of bias. This moves beyond simple demographic breakdowns to capture the nuanced experiences of diverse individuals.

Counterfactual Fairness and Algorithmic Decision Paths

Explore the concept of counterfactual fairness in algorithmic hiring. This involves asking: “Would a different hiring decision have been made if a candidate’s demographic attribute were different, while holding all other qualifications constant?” Analyzing algorithmic decision paths and simulating counterfactual scenarios can reveal whether demographic attributes are unduly influencing hiring outcomes. This requires advanced algorithmic auditing techniques and potentially the development of “what-if” models to assess the sensitivity of hiring decisions to demographic variables.

Counterfactual analysis goes beyond correlation to explore causality in algorithmic bias. This probes the decision-making logic of algorithms to identify points of demographic influence.

Counterfactual fairness analysis examines algorithmic decision paths to reveal if demographic attributes unduly influence hiring outcomes, ensuring equitable processes.
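A basic "what-if" check can be sketched as an attribute-flip test: score each candidate once per value of the demographic attribute and record anyone whose decision changes. This is a simplification of counterfactual fairness, since it ignores how a different attribute might have shaped other parts of a resume. The `toy_score` function is a deliberately biased stand-in for a real tool’s scoring call, which you would need access to.

```python
def counterfactual_flips(score_fn, candidates, attribute, values, threshold):
    """Return ids of candidates whose decision changes when only `attribute` changes."""
    flips = []
    for cand in candidates:
        decisions = set()
        for v in values:
            variant = dict(cand, **{attribute: v})  # copy with one field swapped
            decisions.add(score_fn(variant) >= threshold)
        if len(decisions) > 1:
            flips.append(cand["id"])
    return flips

def toy_score(c):
    """Hypothetical biased scorer used only to exercise the test."""
    return c["years_experience"] * 10 - (5 if c["gender"] == "f" else 0)

candidates = [
    {"id": 1, "years_experience": 5, "gender": "f"},
    {"id": 2, "years_experience": 8, "gender": "f"},
    {"id": 3, "years_experience": 2, "gender": "m"},
]

flips = counterfactual_flips(toy_score, candidates, "gender", ["f", "m"], threshold=50)
print(flips)
```

Only the borderline candidate flips, which is typical: the influence of a demographic attribute tends to show up near the decision threshold.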

Dynamic Bias Drift and Longitudinal Data Analysis

Algorithmic bias is not static; it can drift and evolve over time as training data changes and societal norms shift. Implement longitudinal data analysis to track algorithmic performance and bias levels over extended periods. Monitor for “bias drift,” where an algorithm initially appears fair but gradually becomes biased over time due to feedback loops or evolving data patterns. This requires establishing robust data pipelines for continuous monitoring and analysis of algorithmic hiring outcomes.

Static bias audits are insufficient; dynamic bias drift requires ongoing vigilance. Longitudinal analysis captures the evolving nature of algorithmic bias and ensures sustained fairness.
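A lightweight form of drift monitoring is to recompute one fairness metric per period and flag the first period in which it crosses a floor. The sketch below tracks the ratio of the lowest to the highest group pass rate per quarter; the quarterly counts and the 0.8 floor are illustrative assumptions.

```python
def impact_ratio_by_period(periods):
    """periods: list of (label, {group: (passed, applied)}) in time order."""
    series = []
    for label, counts in periods:
        rates = [passed / applied for passed, applied in counts.values()]
        # Assumes at least one group has a non-zero pass rate in each period.
        series.append((label, min(rates) / max(rates)))
    return series

def first_breach(series, floor=0.8):
    """Label of the first period whose ratio falls below the floor, else None."""
    for label, ratio in series:
        if ratio < floor:
            return label
    return None

# Hypothetical quarterly screening counts.
periods = [
    ("Q1", {"group_a": (40, 100), "group_b": (38, 100)}),
    ("Q2", {"group_a": (40, 100), "group_b": (34, 100)}),
    ("Q3", {"group_a": (40, 100), "group_b": (30, 100)}),
    ("Q4", {"group_a": (40, 100), "group_b": (26, 100)}),
]

series = impact_ratio_by_period(periods)
breach = first_breach(series)
print(series, breach)
```

An audit run only in Q1 would have passed this tool; the steady decline becomes visible only when the periods are viewed together.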

Algorithmic Bias in Promotion and Internal Mobility

Extend bias analysis beyond initial hiring to examine algorithmic bias in promotion and internal mobility processes. Many SMBs use algorithms for talent management, including identifying candidates for promotion or internal transfers. Analyze data on promotion rates, internal mobility patterns, and performance evaluations to identify potential algorithmic bias in these processes. Bias can be amplified as employees progress through their careers if algorithms perpetuate initial disadvantages.

Algorithmic fairness must extend beyond entry-level hiring to encompass the entire employee lifecycle. This ensures equitable opportunities for career advancement and prevents bias from compounding over time.

Integrating External Data and Societal Bias Benchmarking

Integrate external data sources, such as labor market statistics, demographic benchmarks, and societal bias indices, into your algorithmic bias analysis. Compare your internal hiring data to external benchmarks to assess whether your algorithms are reflecting or mitigating broader societal biases. For example, compare the demographic representation in your algorithmically hired workforce to the demographic composition of the available talent pool in your industry and geographic region. This provides external validation and context for your internal bias analysis.

Internal data alone is insufficient; external benchmarks provide crucial context. Societal bias benchmarking grounds algorithmic fairness efforts in broader societal realities.
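The benchmark comparison reduces to subtracting an external share from an internal one. In the sketch below, `benchmark_shares` stands in for whatever labor-market source you use; the numbers are invented and the group labels must match between the two inputs.

```python
def representation_gaps(hired_counts, benchmark_shares):
    """Internal share of hires minus external benchmark share, per group."""
    total = sum(hired_counts.values())
    return {
        group: hired_counts.get(group, 0) / total - share
        for group, share in benchmark_shares.items()
    }

# Hypothetical figures: algorithmically hired staff vs. the regional talent pool.
hired_counts = {"group_a": 70, "group_b": 30}
benchmark_shares = {"group_a": 0.55, "group_b": 0.45}

rep_gaps = representation_gaps(hired_counts, benchmark_shares)
print(rep_gaps)
```

A negative gap means a group is hired at a lower share than its presence in the available talent pool, which narrows down where to look in the hiring funnel.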

Strategic Implementation for Competitive Advantage

At the advanced level, algorithmic bias mitigation becomes a strategic differentiator for SMBs, enhancing their competitive advantage by fostering innovation, attracting diverse talent, and building a more resilient and adaptable workforce. It moves from a compliance issue to a strategic imperative.

Developing Algorithmic Fairness as a Core Business Value

Embed algorithmic fairness as a core business value, integrated into the company’s mission, values, and strategic objectives. Communicate this commitment both internally and externally to attract and retain talent who value ethical and equitable practices. Algorithmic fairness becomes a brand differentiator and a source of competitive advantage. This elevates algorithmic fairness from a technical concern to a fundamental aspect of corporate identity.

Investing in Algorithmic Fairness Research and Development

Allocate resources to research and development in algorithmic fairness. Partner with academic institutions, AI ethics experts, and technology vendors to develop and implement cutting-edge bias mitigation techniques. Invest in training and upskilling your workforce in algorithmic fairness principles and best practices.

This positions your SMB at the forefront of ethical AI adoption and innovation. Algorithmic fairness innovation becomes a strategic investment in future competitiveness.

Building Diverse and Inclusive Algorithmic Development Teams

Ensure that the teams developing and implementing your algorithmic hiring tools are diverse and inclusive. Diverse teams are more likely to identify and mitigate potential biases in algorithms, as they bring a wider range of perspectives and experiences to the development process. Diversity in algorithmic development is not just ethical; it is essential for building fairer and more effective algorithms.

Diversity becomes a critical input for algorithmic fairness. Inclusive teams are less prone to overlooking biases that might be invisible to homogenous groups.

Advocating for Industry-Wide Algorithmic Fairness Standards

Actively participate in industry initiatives and collaborations to develop and promote industry-wide standards for algorithmic fairness in hiring. Share your best practices and lessons learned with other SMBs and contribute to the development of ethical guidelines and regulatory frameworks. Collective action is essential for driving systemic change and creating a level playing field for all businesses.

Industry-wide standards amplify the impact of individual SMB efforts. Collaboration accelerates the adoption of ethical algorithmic practices across the business landscape.

Transparency and Accountability as Competitive Differentiators

Embrace radical transparency and accountability in your algorithmic hiring practices. Publicly report on your algorithmic fairness metrics, bias audits, and mitigation efforts. Be transparent with job applicants and employees about how algorithms are used in hiring and talent management. Transparency builds trust and positions your SMB as a leader in ethical AI adoption.

Transparency becomes a competitive advantage in attracting ethically conscious talent and customers. Accountability reinforces commitment to algorithmic fairness and builds stakeholder confidence.

By embracing these advanced strategies, SMBs can transform algorithmic bias mitigation from a reactive risk management exercise into a proactive driver of competitive advantage. It’s about building a future where ethical AI not only ensures fairness but also fuels innovation, growth, and long-term business success in a rapidly evolving world.


Reflection

Perhaps the most unsettling data point of algorithmic bias in SMB hiring is not found in spreadsheets or dashboards, but in the qualitative feedback absent from the process. The silence of voices never heard, the potential contributions never considered, the innovation stifled by homogeneity: these are the intangible costs of unchecked algorithmic processes, a silent drain on the dynamism and adaptability that should define the small business sector. True competitive advantage may lie not in perfectly calibrated algorithms, but in the messy, unpredictable, and ultimately human act of recognizing potential beyond the metrics, a skill algorithms, in their current form, are fundamentally incapable of replicating.

